We have several AX7 environments (named “Dynamics 365 for Operations” at the time of this last post update), in production.

If you want to learn more about this story :

The New Dynamics AX at Emile Egger

…or directly to the video :

Youtube video about AX at Emile Egger

At some point, we wanted to have the production database available on a single-box environment for development and testing. This is how you can do it.

Summary of the procedure

- Request UAT (testing environment) database refresh : operated by Microsoft

- Make a copy of the refreshed UAT database, prepare it

- Export the UAT database to a dev environment

- Restore on dev environment, finish with some SQL

Approximate time required : 2 days.

Please note that LCS setup and environments may be different, based on your organization.

Important : new post from Microsoft

You can now find better and more comprehensive documentation here : https://docs.microsoft.com/…

Thank you Microsoft 🙂

If like me, you still find this procedure long and unpleasant, here are some Powershell scripts that are a good base to automate the process :

Warning : they are not state-of-the-art (by far), but they do the job.

You will have to open them all and adapt the content before you run them (I did not even bother to use variables). Please share better scripts if you improve them 🙂

Step 1 : Refresh your “SANDBOX: STANDARD ACCEPTANCE TEST” environment from your “PRODUCTION” environment

Please note that in this post, I refer to “SANDBOX: STANDARD ACCEPTANCE TEST” simply as “UAT”, short for User Acceptance Test.

In this step, we will ask Microsoft to copy the production database for us.

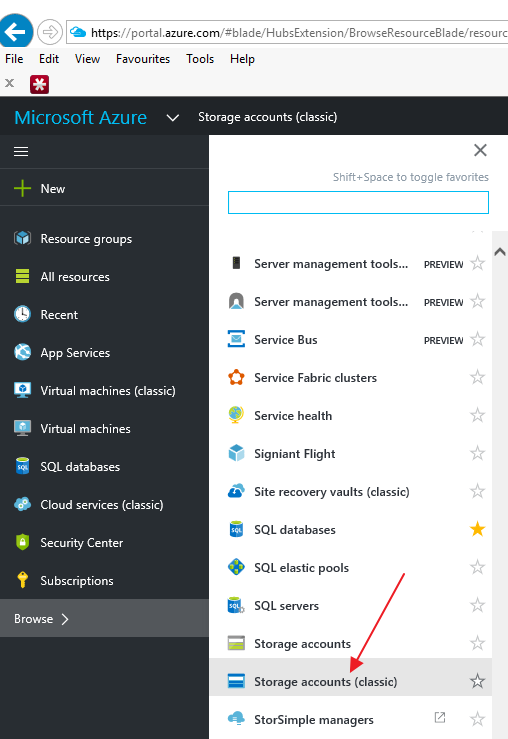

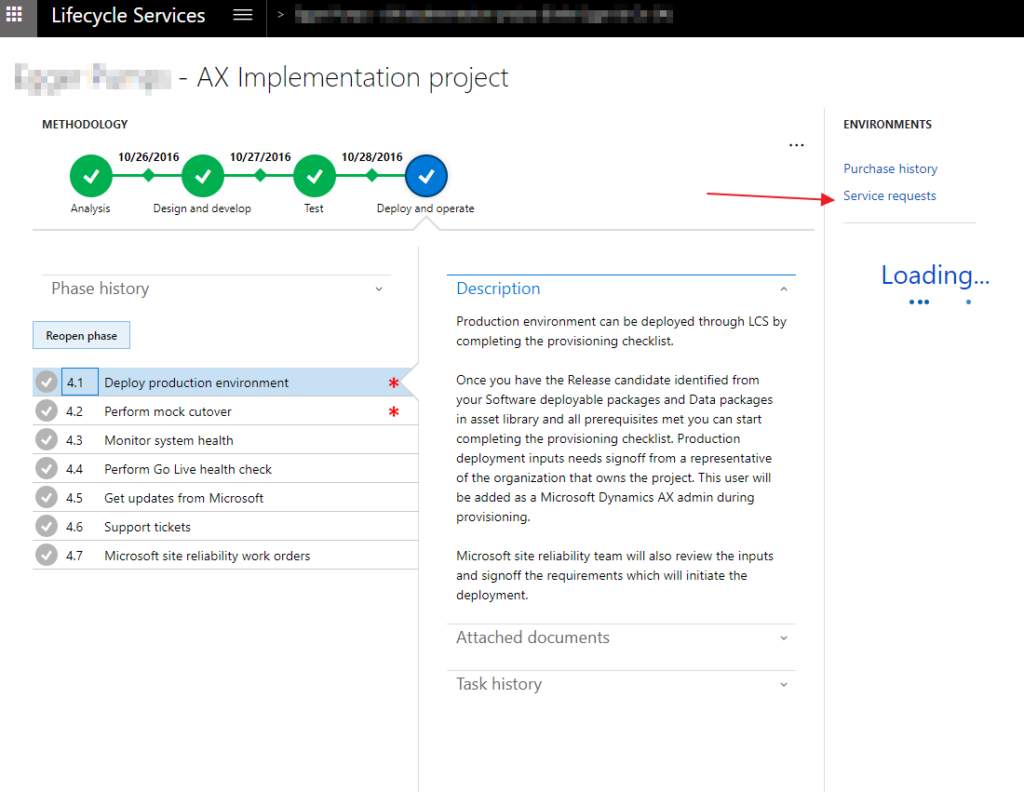

Connect to LCS with admin credentials : https://lcs.dynamics.com/

Choose the project in which your “PRODUCTION” environment is available.

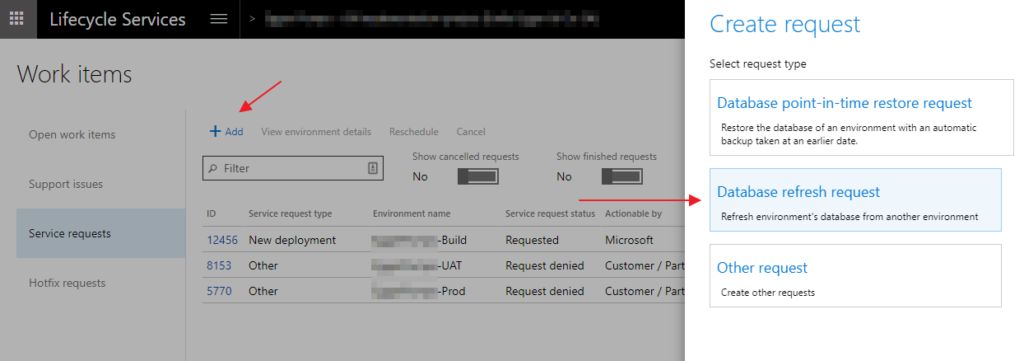

Then, click on “service requests” :

Add > Database refresh request :

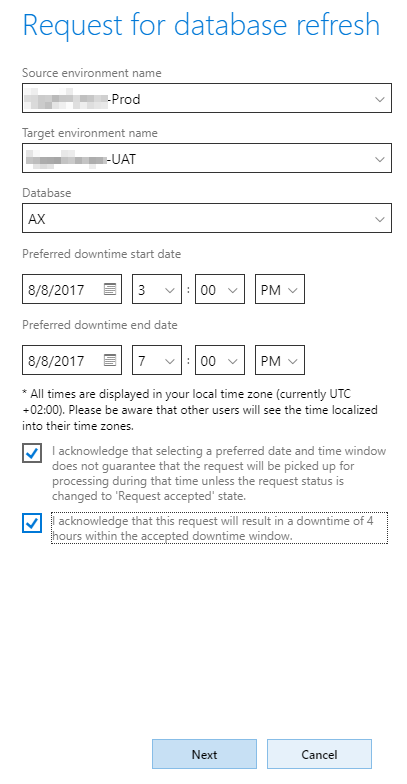

Fill-in the form :

The “downtime” is relative to UAT : your Production environment should not be affected. For safety, make sure to select times outside of intensive business hours.

…wait for Microsoft to do their job. You should receive an email once they are done. Usually, they are quite timely, the worst delay we had was 3 hours late, as long as I remember.

Once they are finished, log in to the UAT environement, and check that the data was refreshed from prod (you should be able to do that by logging into AX web interface and checking your sales orders, for instance).

Step 2 : Prepare the database bacpac on the UAT server

Connect to one of the AOS servers in the UAT environment, or to the BI server with RDP (you will find credentials on LCS, just make sure that your firewall allows outgoing connections to the server). Note that lately, I could not connect to BI servers using RDP, but any AOS will make the trick.

Step 2.1 : Copy the scripts on the UAT server [2 min]

Copy the uat-scripts folder (from the downloaded .zip referenced previously in this post) on your server’s desktop (or any better location).

Step 2.2 : (optional) Re-enable all users on UAT [2 min]

The database refresh has disabled all users but the admin. If, like us, you want to give access to all users to your UAT environment for training and testing purposesyou can do it easily with a single query.

For this step, you can execute script 100_restore-uat-settings-after-refresh.sql : this is an sql script, run it using SQL Management Studio. The credentials you need in order to connect to your database are available on LCS (sqladmin user). As usual : always open the script, read and adapt before you run it.

Step 2.3 : Create a temporary database [7 min]

Use script 101_copyuatdb.sql, wait for the return of the command. Since this is an asynchronous job, you don’t know if it is finished or not.

That’s why you can use script 102_monitorcopy.sql (optionnal).

Once the resultset of 102_monitorcopy.sql is empty, you can go on with script 103_preparecopy.sql : please note that you have to execute the command on the newly created database (the script will prevent unintended execution on the wrong db). This script should execute fairly quickly (a few minutes at most).

Step 2.4 : Create bacpac file [1-2 hours+]

This step is quite easy : just adapt and run script 104_exportdb.ps1 on a powershell console.

…don’t despair and do something else with your life : this command is extremely long (I had enough time to write this post while it ran…). Expect at least 1 hour wait.

In the meantime, you can create the storage account if you don’t have it yet.

Step 3 : (do only once, optional) Setup a blob storage to transfer the file

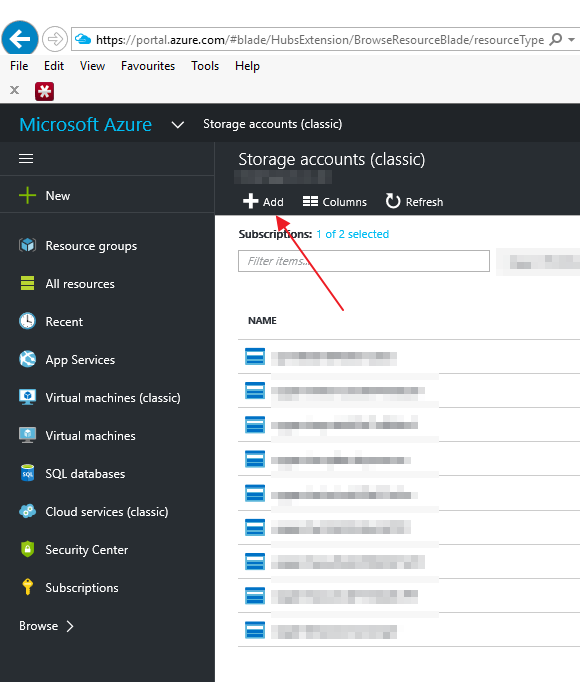

Step 3.1 : Create a storage account [20 min]

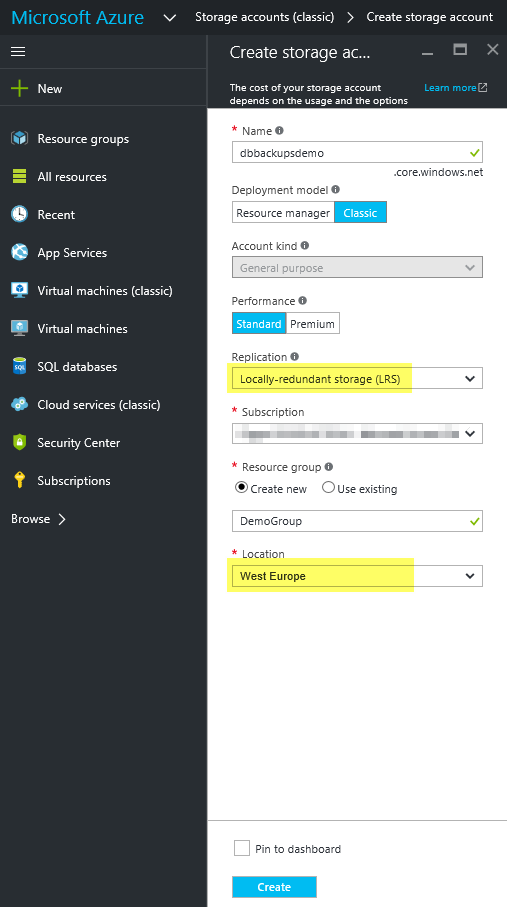

Connect to https://portal.azure.com/, and go to “storage” (it works just as fine with the old Azure portal).

Then, add an account (unless you already have a suitable storage account, of course).

Give it a name (it has to be a unique subdomain, but the name itself is not relevant : try until you find something available).

Choose the replication you want : if like me you just want a “temporary” storage, choose the weakest redundancy possible, in order to reduce the cost (I chose Locally Redundant). You can create a new resource group or just use an existing group, again, this is not a big deal : it is just a way to group your resources.

The location is the most important setting : you don’t want to transfer large amount of data across the globe just for database backup. Think about the rainforest and about your wallet! In my case, since my database server is located in “West Europe”, I chose a storage in the same location.

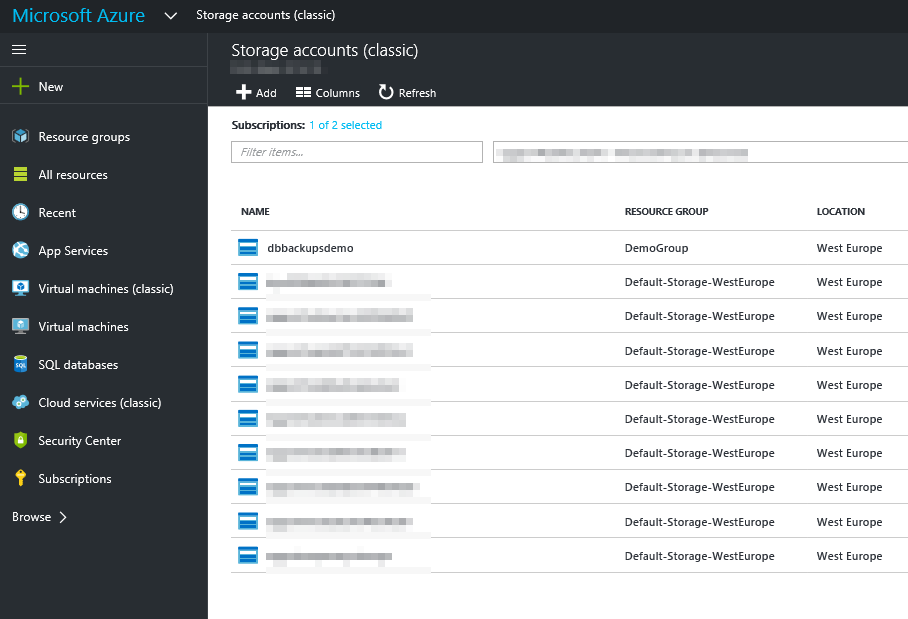

After a few minutes, your new account should appear in the list :

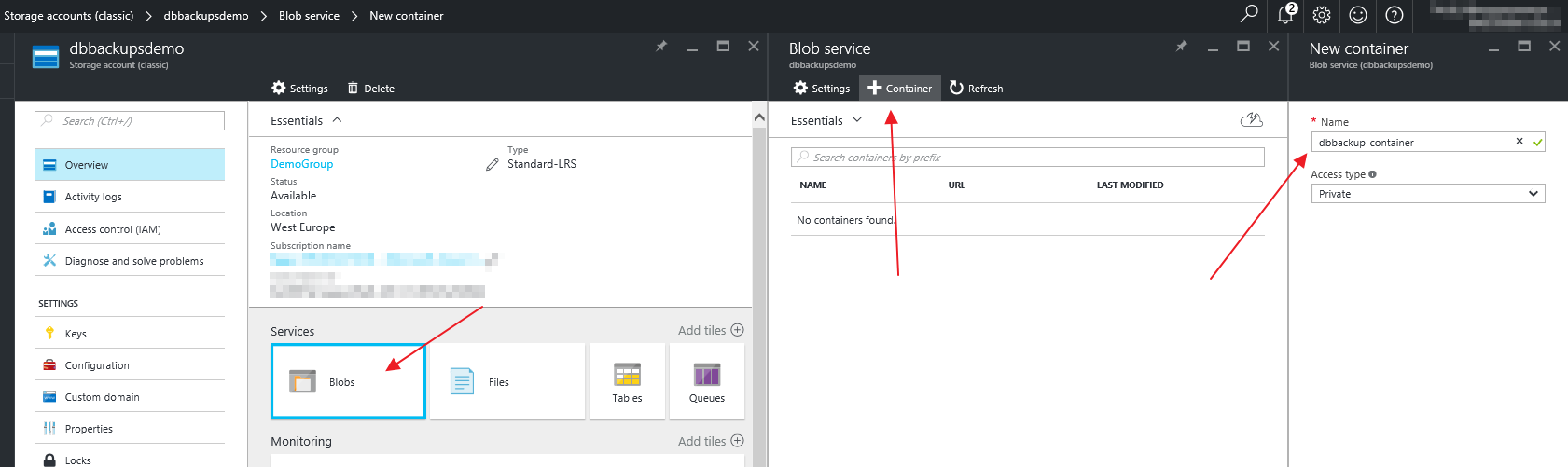

Step 3.2 : Create a container in the storage account [1 min]

You can now click on the storage account, and create a container :

Leave “Private” in the “New container” dialog (as there is no reason to grant access to the world to your database backup).

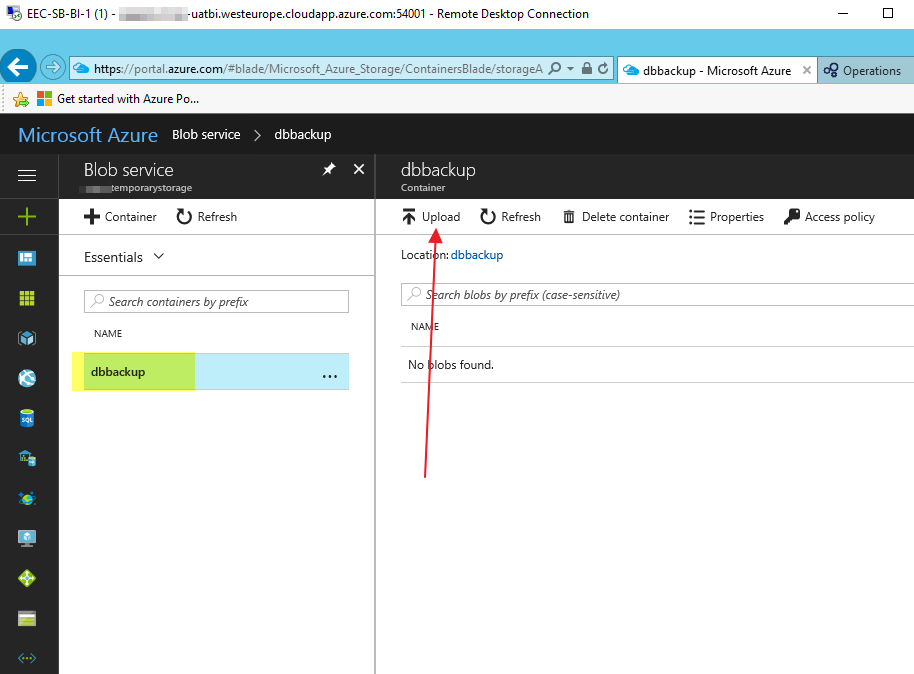

Step 4 : Transfer the file [10 min]

Do this step only after the command from step 2.4 is finished, from the UAT server.

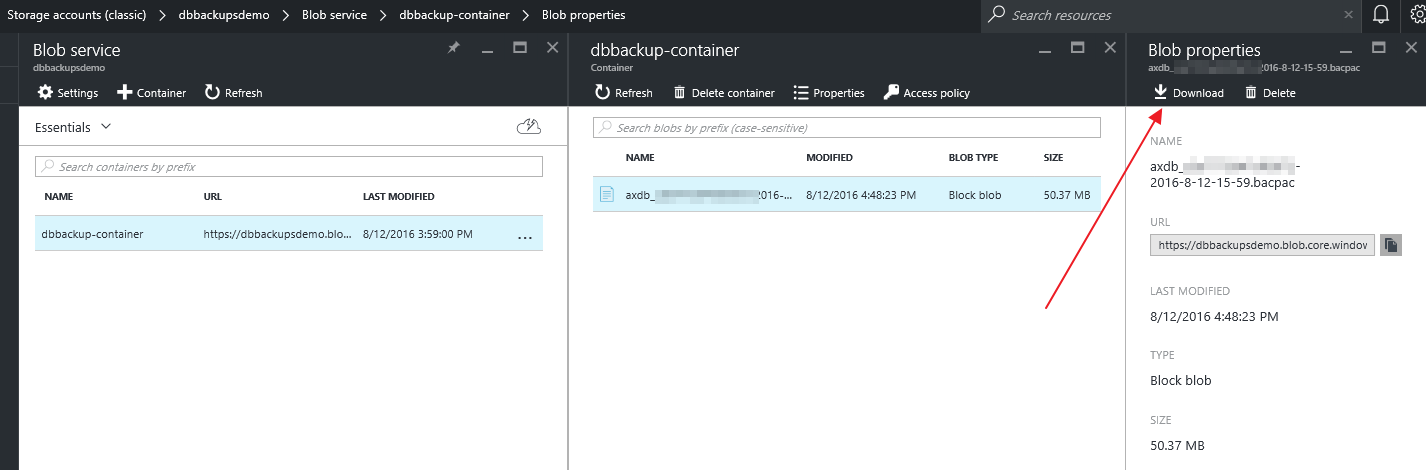

Upload the bacpac file (should be in D: see last script) to the blob storage (note that you any other means to transfer the file is OK).

Download the bacpac file directly on the machine where you want to restore your backup. As the bacpac is compressed, it should not be too long. My database was 3+ GB, and compressed with all the data, it is only 50 MB.

Note that the downloaded file has extension .zip : just rename it to .bacpac.Step 5 : Restore the database

There are many ways to restore an Azure database to another server, but the other solutions I tried failed. In particular, trying to restore the backup “in-band” with SQL Server Management Studio (SSMS) and the storage access keys took more than 10 hours, just to fail at the end…

Step 5.1 : Copy the scripts on the target server [2 min]

Copy the target-scripts folder (from the downloaded .zip referenced previously in this post) on your target server’s desktop (or any better location).

Step 5.2 (optional, do only once) Get SqlPackage.exe [20 min]

SqlPackage.exe should already be available on the target server. If it is not the case, follow the steps below to get it.

In order to restore your bacpac, you will need the latest version of SqlPackage.exe : to do so, you can simply install the latest version of SSMS :

MSDN SQL Server Management Studio download

If you run in trouble with an exception like :

“Internal Error. The internal target platform type SqlAzureV12DatabaseSchemaProvider does not support schema file version ‘3.3’”

(that’s what happened to me on the one-box AX7 DEV environment), then just install the latest version of SSMS.

If you try to restore from the SSMS user interface (Import Data-Tier Application) you might end up with an out of memory error…

Another way to solve the out of memory problem might be to right-click on the server in SSMS > Properties > Memory…

The safest and quickest way I found to restore the database was just to use SqlPackage.exe in command line.

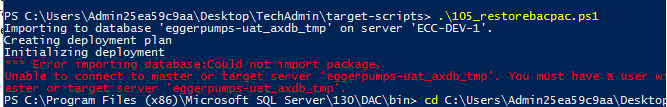

Step 5.3 Import the database

Review and run script 105_restorebacpac.ps1 in a powershell command line. Read below for command line details.

PS C:\Program Files (x86)\Microsoft SQL Server\130\DAC\bin> .\SqlPackage.exe /a:Import /sf:"C:\Users\axlocaladmin\Downloads\axdb_XXX-2016-8-11-15-11.bacpac" /tdn:axprod20160811 /tsn:TEST-1

/tdn is the name of the database to which you want to restore the file (pick any name you want)

/tsn is the SQL server name

…again, this process is long (but very fast compared to the other methods I tried).

If you end up with this error :

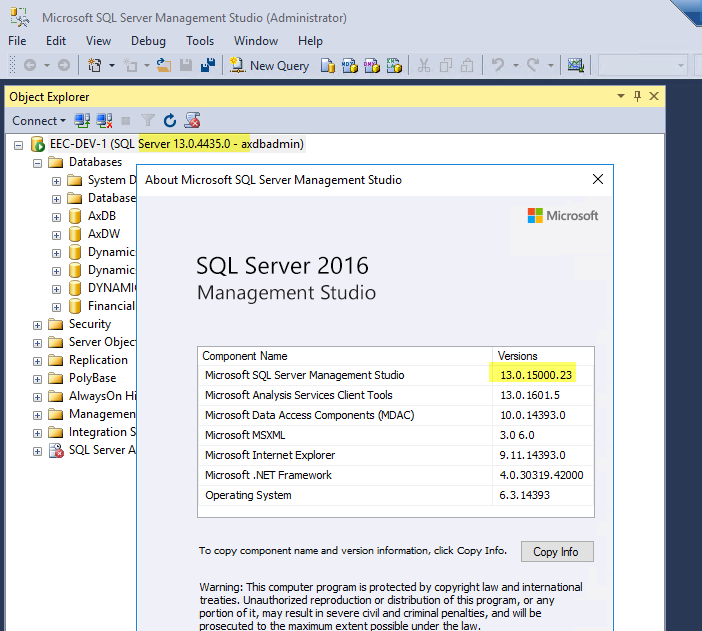

…just go back to point 5.2, you probably have an old version of SSMS. Mine was :

…and 17.3 is the current version.

Step 5.4 (Optional) Replace AX database

This step must be performed only if you intend to replace an existing AX database (swap an environement data content).

Review and run commands on script 106_singleuser.sql

Step 5.5 (Optional) Check copy results

Finally, you can check the content of your database against the source database, for instance by using this query and comparing with the source db :

SELECT TOP 20

t.[name] AS TableName,

p.[rows] AS NbRows

FROM

sys.tables t

INNER JOIN

sys.indexes i ON t.object_id = i.object_id

INNER JOIN

sys.partitions p ON i.object_id = p.object_id AND i.index_id = p.index_id

WHERE

t.[name] NOT LIKE 'dt%' AND

i.[object_id] > 255 AND

i.[index_id] <= 1

GROUP BY

t.[name], i.[object_id], i.[index_id], i.[name], p.[rows]

ORDER BY

p.[rows] DESC

Dear Daniel,

Yes, there are further steps, but that’s feasible.

Let me check with MS if they let me share this information, I’ll come back with the reply (and let’s hope the information) in a few days.

Have a nice day!

Hi Daniel,

Good job!

Unfortunately, no news yet from MS, but they are discussing it.

Another tip : the machine may be very slow, once you have restored the database. You have to tweak the enabled services. Once you disable those you don’t need, it is fast as lightning.

Have a nice day!

Good news! Tariq has released an official procedure 🙂

https://ax.help.dynamics.com/en/wiki/copy-an-ax-database-from-azure-sql-database-to-sql-server/

And another very useful article :

https://ax.help.dynamics.com/en/wiki/debug-an-x-issue-against-a-copy-of-production/

Just the AX piece missing, but I know they are working on it.

Oooops, I forgot to reply to your comment! We did not need all the services related to retail because we do not use POS and Retail. But in fact, that was Davy, from Microsoft, who did the great job of figuring what services could be stopped.

I updated the post to better reflect the procedure and provide a few scripts, now that Microsoft has given all the necessary details.

I am unable to connect to UAT Database through SSMS in UAT AOS server

Dear Velan,

In LCS, you can find the sql server, database name, username and password: under “database accounts”.

Do not forget to install the latest version of SSMS and restart the server, as mentioned in the prerequisites : https://docs.microsoft.com/…

Hi Raphael,

I have exported my .bacpac file from the UAT and trying to import it into my vm using the below code. The existing DB name in my vm is AxDB and the name of the bacpac file is Test.bacpac

SqlPackage.exe /a:import /sf:C:\Temp\test.bacpac /tsn:xxxxxxxx /tdn:AxDB /p:CommandTimeout=1200. But i ran into the below error and dont know what went wrong.

Data cannot be imported into target because it contains one or more user objects. Import should be performed against a new, empty database.

Hi Konda,

It looks like you are trying to import against a database that already contains objects.

Maybe you could try to delete the target database and recreate it?

Best of luck!